04.6.13.01 deepseek-coder-6.7b-base-awq

Model Description

The @hf/thebloke/deepseek-coder-6.7b-base-awq model includes two nodes:

- deepseek-coder-6.7b-base-awq Prompt (preview)

- deepseek-coder-6.7b-base-awq With History (preview)

Model ID: @hf/thebloke/deepseek-coder-6.7b-base-awq. DeepSeek Coder is a family of language models trained on large amounts of code and natural language in English and Chinese. Each model is trained from scratch on 2 trillion tokens, of which 87% is code and 13% is natural language.

The model is trained for coding and programming tasks. Its purpose is to give developers intelligent tools that simplify and speed up writing and working with code:

- Code autocompletion. The model can suggest appropriate continuations of lines of code based on context, helping to speed up the programming process.

- Code generation. The model can generate code fragments for a given task, programming language, and other constraints.

- Code understanding and analysis. The model can be used to interpret and structurally parse existing programs, revealing their characteristics and patterns.

- Code refactoring and optimization. The model can suggest changes that improve code quality, readability, and performance.
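The autocompletion and generation tasks above both rely on the model continuing the text it is given. The model name marks it as a base model, and base models generally respond better to unfinished code than to a conversational request. The sketch below is one possible way to frame a task as a code prefix for the User Prompt field. The helper name `build_completion_prompt` and its parameters are hypothetical and are not part of the node.

```python
def build_completion_prompt(task: str, signature: str) -> str:
    """Frame a task as unfinished Python code for the model to continue.

    Hypothetical helper: the task description becomes a comment block and
    the function signature is left open so the model writes the body.
    """
    comment = "\n".join(f"# {line}" for line in task.strip().splitlines())
    return f"{comment}\n{signature.rstrip()}\n"


prompt = build_completion_prompt(
    "Return the n-th Fibonacci number.",
    "def fibonacci(n: int) -> int:",
)
print(prompt)
```

The resulting string can be pasted into the User Prompt field. Whether this framing gives better results than a plain question depends on the task, so treat it as a starting point.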

Example of launching a node

A description of the node fields can be found here.

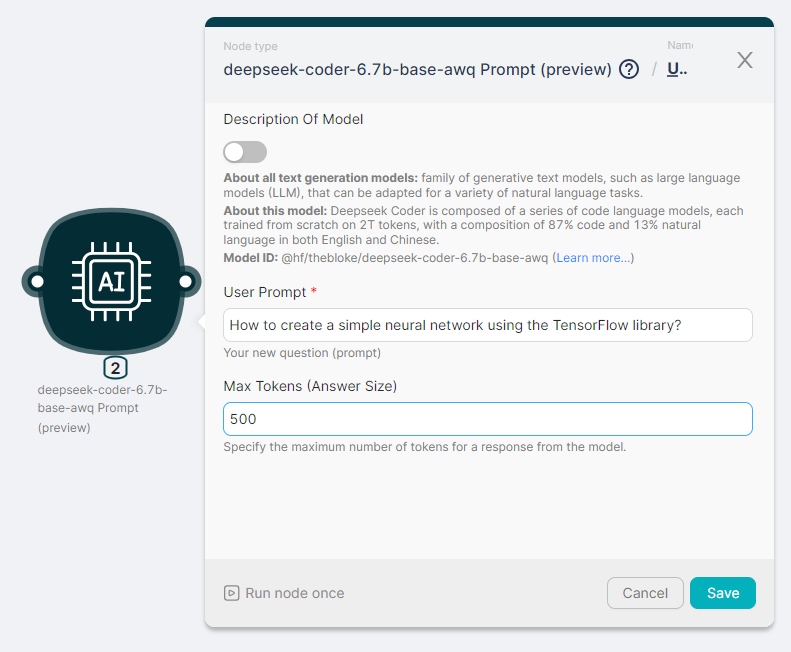

Let's run the deepseek-coder-6.7b-base-awq Prompt (preview) node to process text and generate a response with the following parameters:

- User Prompt - How to create a simple neural network using the TensorFlow library?

- Max Tokens (Answer Size) - 256.

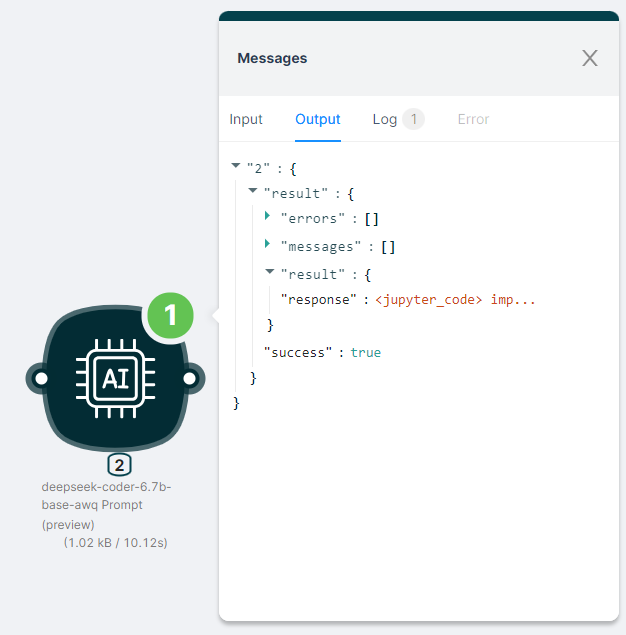

The output of the node execution is JSON containing:

- the response to the request, in "response";

- the status of the action, "success": true.

JSON

{
  "result": {
    "errors": [],
    "messages": [],
    "result": {
      "response": "\n\n<jupyter_code>\nimport tensorflow as tf\nimport numpy as np\n\n# Define the input and output data\nx_train = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])\ny_train = np.array([[0], [1], [1], [0]])\n\n# Define the neural network architecture\nmodel = tf.keras.Sequential([\n tf.keras.layers.Dense(2, activation='sigmoid'),\n tf.keras.layers.Dense(1, activation='sigmoid')\n])\n\n# Compile the model\nmodel.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])\n\n# Train the model\nmodel.fit(x_train, y_train, epochs=1000, verbose=0)\n\n# Test the model\nx_test = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])\ny_test = np.array([[0], [1], [1], [0]])\nloss, accuracy = model.evaluate(x_test, y_test)\nprint('Test loss:', loss)\nprint('Test accuracy:', accuracy)\n<jupyter_output>\n4/4 [==============================] - 0s 2ms/step - loss: 0.0000e+00 - accuracy: 1.0000\nTest loss: 0.0\nTest accuracy: 1.0\n"
    },
    "success": true
  }
}
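The JSON output above nests the generated text two levels deep and wraps the code in `<jupyter_code>` and `<jupyter_output>` tags. The sketch below shows one way to check the status flag and pull out only the code. It assumes the output always has the shape shown in this example. The function names are hypothetical, and the sample payload is a shortened copy of the response above. Note that the text after `<jupyter_output>` is part of the model's generated text like everything else in "response".

```python
import json

START_TAG = "<jupyter_code>"
END_TAG = "<jupyter_output>"


def extract_response(raw_json: str) -> str:
    """Return the generated text, or raise if the node reported a failure."""
    payload = json.loads(raw_json)
    outer = payload["result"]
    if not outer.get("success", False):
        raise RuntimeError(f"node call failed: {outer.get('errors')}")
    return outer["result"]["response"]


def extract_code(response: str) -> str:
    """Return the text between the two tags, or the whole text if no start tag."""
    start = response.find(START_TAG)
    if start == -1:
        return response.strip()
    start += len(START_TAG)
    end = response.find(END_TAG, start)
    if end == -1:
        end = len(response)
    return response[start:end].strip()


# Shortened sample with the same structure as the node output above.
sample = json.dumps({
    "result": {
        "errors": [],
        "messages": [],
        "result": {
            "response": "\n\n<jupyter_code>\nimport tensorflow as tf\n"
                        "<jupyter_output>\nTest accuracy: 1.0\n"
        },
        "success": True,
    }
})

code = extract_code(extract_response(sample))
print(code)
```

Checking "success" before reading "response" keeps a failed call from being mistaken for an empty answer.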